Machine Learning for 10-Year-Olds

Introduction

Machine learning methods are ways to teach computers to learn from data without giving them explicit instructions on how to do so. Unlike us, who get much of our knowledge from teachers and university professors, computers learn by trial and error. In this post, we will look at two of the most popular and widely used classification models in machine learning: Decision Tree and Random Forest. Don’t freak out if you are not a data science person, because you only need to be a cat person! Or at least know what cats look like :)

Decision Tree

What is Decision Tree?

Trees? Yes, trees. How could a tree possibly make a decision? Well, we are not asking trees to make decisions for us; we are making decisions the way a tree grows its branches.

We know trees have lots of branches, and each branch divides at some point into more branches. In a decision tree, a point where a branch splits is called a ‘node’. At each node, the computer makes a ‘Yes/No’ decision based on a certain criterion to sort the data. With many nodes connected to each other, a tree can reach conclusions much the way a human would.
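To make the idea of nodes concrete, here is a minimal sketch in Python of how a tree of Yes/No questions could be stored and followed. The `Node` and `Leaf` classes, the `classify` function, and the “corners” question are hypothetical names chosen for illustration, not part of any library.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    """An end point of the tree: it just holds the final answer."""
    answer: str


@dataclass
class Node:
    """A branching point: asks one Yes/No question about the data."""
    question: Callable[[dict], bool]  # returns True for "Yes", False for "No"
    yes: "Tree"
    no: "Tree"


Tree = Union[Node, Leaf]


def classify(tree: Tree, item: dict) -> str:
    """Start at the root and follow the Yes or No branch at each node."""
    while isinstance(tree, Node):
        tree = tree.yes if tree.question(item) else tree.no
    return tree.answer


# A one-question tree: "Does the shape have corners?"
tree = Node(
    question=lambda shape: shape["corners"] > 0,
    yes=Leaf("maybe a square"),
    no=Leaf("not a square"),
)

print(classify(tree, {"corners": 0}))  # prints "not a square"
print(classify(tree, {"corners": 4}))  # prints "maybe a square"
```

A bigger tree is built the same way, by putting another `Node` where a `Leaf` used to be.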

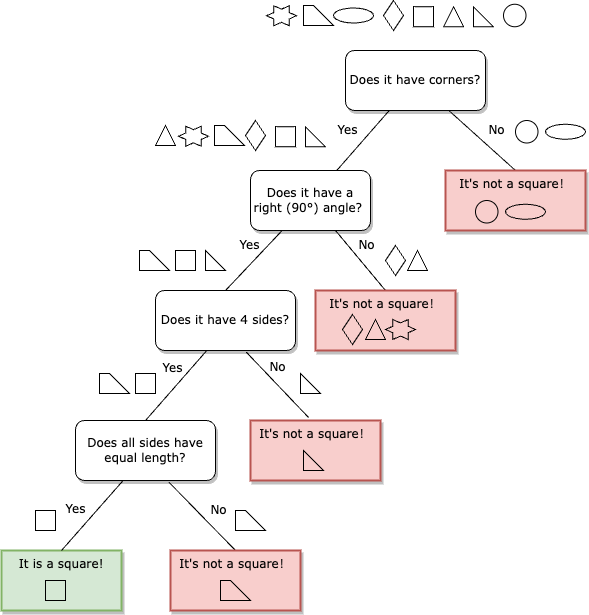

For example, to tell squares apart from other shapes, a computer might develop the following decision tree through trial and error.

We can see that the tree makes a decision at each node based on its condition and sends each shape down one of the two branches that follow. For instance, at the root node, circles and ellipses are sent to the ‘It’s not a square’ block, since a shape without corners can’t be a square; meanwhile, the remaining shapes are still candidates and are assessed further. In this example, the decision tree identifies squares in 4 simple steps, and the whole decision-making process is easy to understand and interpret.
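The square-finding tree can also be written as a chain of Yes/No questions in Python. The figure’s exact questions are not spelled out in the text, so the four checks below (corners, four sides, equal sides, right angles) are an assumed example of what such a tree might ask, and the shape descriptions are hypothetical.

```python
def is_square(shape: dict) -> bool:
    # Root node: a shape without corners (circle, ellipse) can't be a square.
    if shape["corners"] == 0:
        return False
    # Node 2: a square has exactly four sides.
    if shape["sides"] != 4:
        return False
    # Node 3: all four sides must have the same length.
    if len(set(shape["side_lengths"])) != 1:
        return False
    # Node 4: every corner must be a right angle (90 degrees).
    return all(angle == 90 for angle in shape["angles"])


shapes = {
    "circle": {"corners": 0, "sides": 0, "side_lengths": [], "angles": []},
    "triangle": {"corners": 3, "sides": 3, "side_lengths": [3, 3, 3], "angles": [60, 60, 60]},
    "rectangle": {"corners": 4, "sides": 4, "side_lengths": [2, 5, 2, 5], "angles": [90, 90, 90, 90]},
    "rhombus": {"corners": 4, "sides": 4, "side_lengths": [3, 3, 3, 3], "angles": [60, 120, 60, 120]},
    "square": {"corners": 4, "sides": 4, "side_lengths": [3, 3, 3, 3], "angles": [90, 90, 90, 90]},
}

for name, shape in shapes.items():
    print(name, is_square(shape))  # only "square" prints True
```

Each `if` plays the role of one node: once a shape is sent down a “No” branch, it never reaches the later questions.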


But…

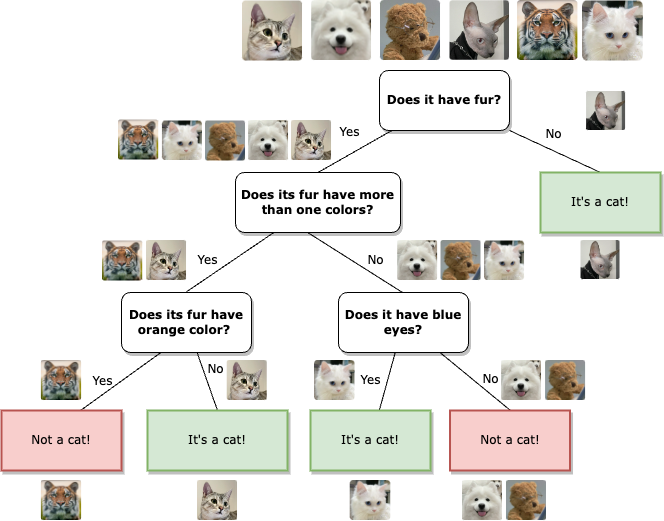

However, a decision tree has its limitations in trickier scenarios. Say you now have four pictures, and only the first two are cats. Imagine you are a computer with no knowledge of animals; you might come up with the following tree to help you identify cats:

Although the criteria seem unreasonable by common sense, the tree correctly identifies all the cats in the given pictures. However, if we ask you to identify cats in two new pictures, a pure white cat and a tiger, this decision tree no longer works because it is too simple.

After adding these two pictures to your knowledge, you can update the decision tree by adding more nodes and branches.

This new tree can now correctly identify the tiger as ‘not cat’ and the white cat as ‘cat’. Excellent! But what happens if we show you a picture of a cat with solid black fur and yellow eyes? It looks like we can’t do anything but expand the tree again.

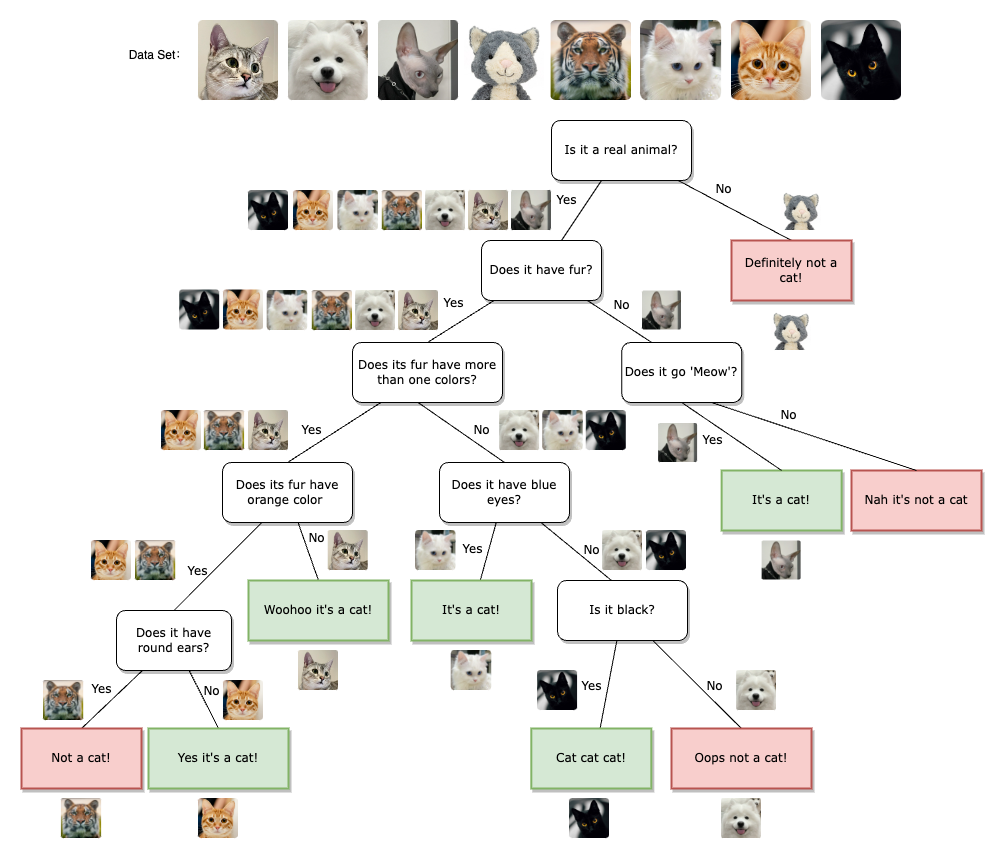

If we keep adding more animals or cat breeds, we will need to expand the tree further to accurately distinguish cats from similar animals. We might end up with a tree that looks like this:

Even though we now have an enormous decision tree, it can still go wrong on pictures it has never seen. As the expansion continues, the tree picks up more and more odd criteria that only apply to the pictures it was built from. This is called overfitting: the decision tree fits the given dataset so closely that it performs perfectly on it, but it is likely to make wrong predictions when you give it new data. Furthermore, a complicated tree takes longer to reach a conclusion.
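Overfitting can be imitated with a toy example. Below, an “overgrown” model simply memorises every training picture, while a small tree asks only two general questions. The picture features (`fur`, `ears`, `whiskers`) and both models are hypothetical simplifications, not how a real tree is trained, but they show why memorising the training data fails on a new picture.

```python
# Each training picture is described by a few made-up features and a label.
training = [
    ({"fur": "orange", "ears": "pointy", "whiskers": True}, "cat"),
    ({"fur": "grey", "ears": "pointy", "whiskers": True}, "cat"),
    ({"fur": "brown", "ears": "floppy", "whiskers": True}, "not cat"),
    ({"fur": "green", "ears": "none", "whiskers": False}, "not cat"),
]


def overgrown_tree(picture: dict) -> str:
    """Memorises the training pictures: returns the stored label only for an exact match."""
    for features, label in training:
        if features == picture:
            return label
    return "not cat"  # anything it has never seen is treated as 'not cat'


def simple_tree(picture: dict) -> str:
    """Asks two general questions instead of memorising details."""
    if picture["ears"] == "pointy" and picture["whiskers"]:
        return "cat"
    return "not cat"


# Both models get every training picture right...
for features, label in training:
    assert overgrown_tree(features) == label
    assert simple_tree(features) == label

# ...but only the simpler tree recognises a new, pure white cat.
white_cat = {"fur": "white", "ears": "pointy", "whiskers": True}
print(overgrown_tree(white_cat))  # prints "not cat"
print(simple_tree(white_cat))     # prints "cat"
```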

Random Forest

Instead of building one complicated decision tree with an overwhelming number of criteria, a random forest, as the name suggests, creates many different decision trees and uses the power of collaboration to solve the problem.

In other words, the computer breaks a complex problem into a few similar but simpler subproblems and builds a decision tree for each one. The final conclusion is the majority vote of the individual trees’ decisions. In this way, a random forest greatly reduces the overfitting problem mentioned above and lowers the risk of making a wrong decision just because a single tree is imperfect.
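Combining the trees’ answers by majority vote takes only a few lines of Python. This sketch assumes each tree has already produced its answer as a label; `majority_vote` is a hypothetical helper, not a library function.

```python
from collections import Counter


def majority_vote(votes: list[str]) -> str:
    """Return the answer given by the most trees."""
    # most_common(1) returns a list like [("not cat", 2)]
    winner, _count = Counter(votes).most_common(1)[0]
    return winner


votes = ["cat", "not cat", "not cat"]
print(majority_vote(votes))  # prints "not cat"
```

With an odd number of trees and only two possible answers there can never be a tie; with an even number, `Counter.most_common` would simply favour the answer it saw first.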

Why random?

So why is it called a “Random Forest”? Which part of it is “random”? The randomness comes from the step where we split the complex problem into pieces. Each tree is trained on a random sample of the pictures, and when choosing a question, it may only pick from a random selection of the features (such as colour, tail length or nose shape). Among those, it keeps the question that sorts the pictures best.
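Here is a simplified sketch of those two sources of randomness. Each tree gets a “bootstrap” sample (pictures drawn at random with replacement, so some appear twice and some are left out) plus a random handful of features. The helper names, the picture list and the feature list are hypothetical; also, libraries such as scikit-learn usually redraw the feature subset at every node rather than once per tree, so treat this as an illustration only.

```python
import random

FEATURES = ["fur colour", "eye colour", "tail length", "ear shape", "nose length", "size"]


def bootstrap_sample(pictures: list, rng: random.Random) -> list:
    """Draw as many pictures as the original set, with replacement,
    so some pictures appear more than once and others are left out."""
    return [rng.choice(pictures) for _ in range(len(pictures))]


def random_features(features: list, k: int, rng: random.Random) -> list:
    """Pick k different features for one tree to look at."""
    return rng.sample(features, k)


rng = random.Random(0)  # fixed seed so the run is repeatable
pictures = ["cat 1", "cat 2", "dog", "frog", "tiger", "white cat"]

for tree_id in range(1, 4):
    sample = bootstrap_sample(pictures, rng)
    feats = random_features(FEATURES, 2, rng)
    print(f"tree #{tree_id}: trains on {sample} using {feats}")
```

Because every tree sees slightly different pictures and features, the trees make different mistakes, which is exactly what lets the majority vote cancel many of them out.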

Example…?

Back to our cat example: a computer may generate three trees. Tree #1 learns to identify cats by their colour, tree #2 by their body parts, and tree #3 by their faces. Let’s see what happens if I give the following picture to this random forest.

Tree #1 might give me the wrong conclusion, since brown is also a common colour for cats, but tree #2 might say that a cat does not have such a short tail, and tree #3 might argue that a cat does not have such a long nose. With two of the three trees voting ‘not a cat’, the majority wins, and my random forest gives me the correct answer: it’s NOT a cat!
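The three-tree example can be played out in code. The picture’s features (brown fur, short tail, long nose) come from the description above; the exact rule inside each tree is a hypothetical stand-in for what trees #1 to #3 might have learned.

```python
from collections import Counter

# Features of the mystery picture, as described in the text.
picture = {"fur": "brown", "tail": "short", "nose": "long"}


def tree_colour(p: dict) -> str:
    # Tree #1: brown is a common cat colour, so this tree is fooled.
    return "cat" if p["fur"] in {"brown", "orange", "grey", "black", "white"} else "not cat"


def tree_body(p: dict) -> str:
    # Tree #2: cats usually have long tails.
    return "cat" if p["tail"] == "long" else "not cat"


def tree_face(p: dict) -> str:
    # Tree #3: cats have short noses.
    return "cat" if p["nose"] == "short" else "not cat"


def forest_predict(p: dict) -> str:
    votes = [tree(p) for tree in (tree_colour, tree_body, tree_face)]
    print("votes:", votes)
    # The answer with the most votes wins.
    return Counter(votes).most_common(1)[0][0]


print(forest_predict(picture))  # prints the votes, then "not cat"
```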

Conclusion

At this point, you have learned two popular machine learning methods that are widely used across many industries. I hope this post sparks your interest in data science and statistics. In the next post, we will talk about neural networks, a much more powerful and mysterious tool for prediction. Again, don’t be freaked out by the name! We will make sure to “divide and conquer” the problem!

References:

Adolfo, A. (2021, January 14). Introduction to the binary tree. Medium. Retrieved March 9, 2023, from https://adamadolfo8.medium.com/introduction-to-the-binary-tree-de2f393b1cae

Chang, S. (2020, June 30). Photo by Sam Chang on Unsplash. Beautiful Free Images & Pictures. Retrieved March 9, 2023, from https://unsplash.com/photos/5-ckjYvTZQw

Hernandez, B. (2019, July 3). Homeschooling resources for learning about Dolphins. ThoughtCo. Retrieved March 9, 2023, from https://www.thoughtco.com/learning-about-dolphins-1834133

Lucy. (2023, January 20). White Cat Facts: 8 reasons why all white cats are awesome. The Happy Cat Site. Retrieved March 9, 2023, from https://www.thehappycatsite.com/white-cat/

Pruett, H. (2022, December 4). The 10 cutest frogs in the world. AZ Animals. Retrieved March 9, 2023, from https://a-z-animals.com/blog/the-10-cutest-frogs-in-the-world/

Stetson, L. (2021, November 24). Blue-Eyed Dog Names – nature, color & movie inspired names. Puppy In Training. Retrieved March 9, 2023, from https://puppyintraining.com/blue-eyed-dog-names/

TIERART Wild Animal Sanctuary. (n.d.). About Tigers – also called “Panthera tigris”. TIERART Wild Animal Sanctuary - a FOUR PAWS project. Retrieved March 9, 2023, from https://www.tierart.de/en-us/interesting-facts-about-animals/about-tigers-panthera-tigris

Portrait of a black cat with yellow eyes. Portrait photo of a black cat with yellow eyes, licensed commercial image 0n7dt5, 摄图新视界 (699pic). (n.d.). Retrieved March 9, 2023, from https://xsj.699pic.com/tupian/0n7dt5.html

- Post title: Machine-learning for 10-years-old

- Post author: MZX

- Create time: 2023-03-06 12:41:39

- Post link: https://podor.org/PodorBlog/2023/03/06/Machine-learning-for-10-years-old/

- Copyright Notice: All articles in this blog are licensed under BY-NC-SA unless otherwise stated.